
Wrong By Design

STAT 20: Introduction to Probability and Statistics

Agenda

- Concept Questions

- Problem Set 15

Concept Questions

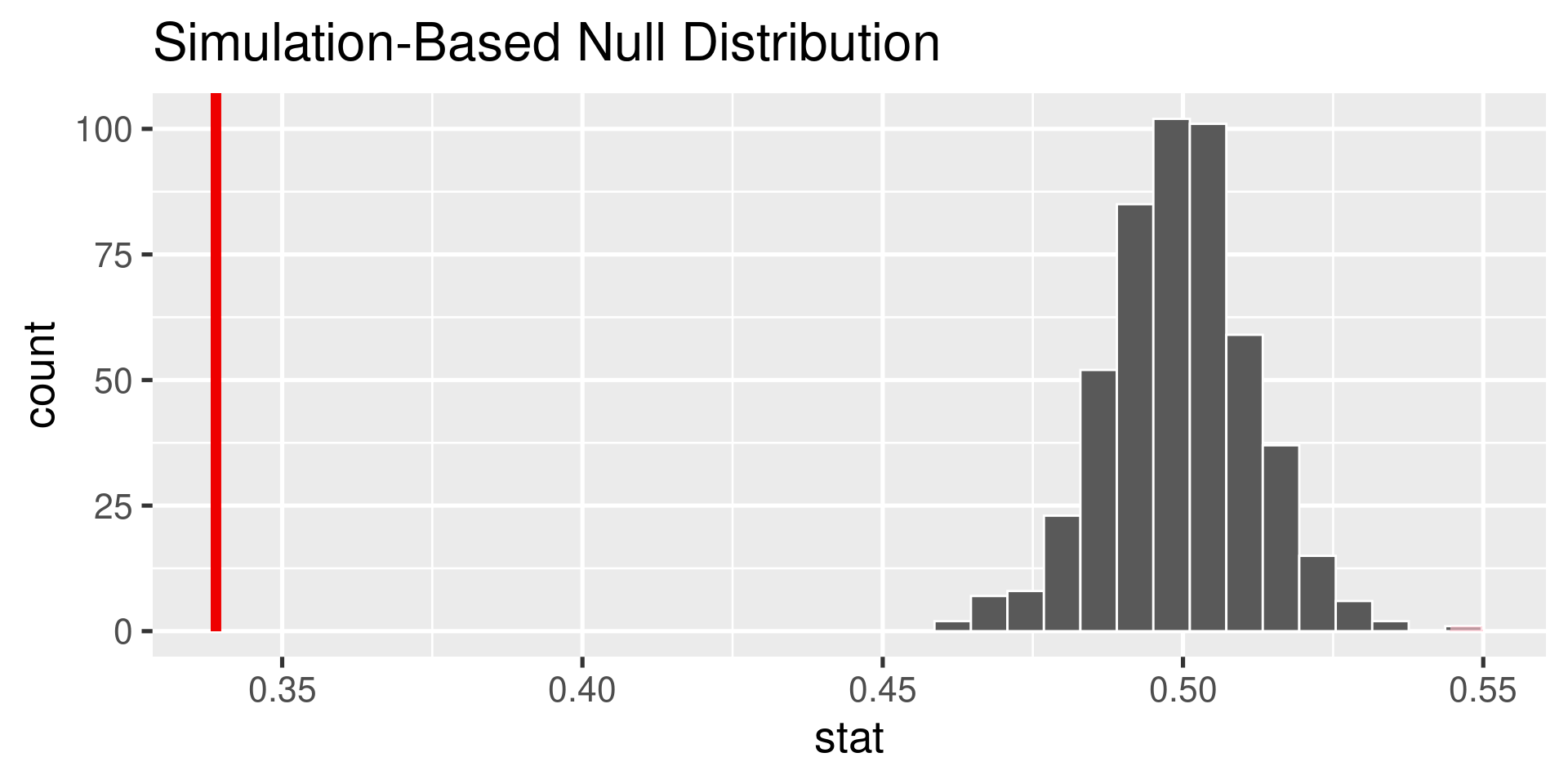

Instead of constructing a confidence interval to learn about the parameter, we could assert a value for the parameter and use a hypothesis test to check whether the data are consistent with it. Suppose you want to test whether there is a clear majority opinion, either supporting or opposing the project.

What are the null and alternative hypotheses?

```r
library(tidyverse)
library(infer)
library(stat20data)

# TRUE if the Q18 answer is any level of support, FALSE otherwise
ppk <- ppk |>
  mutate(support_before = Q18_words %in% c("Somewhat support",
                                           "Strongly support",
                                           "Very strongly support"))

# Observed statistic: the sample proportion of supporters
obs_stat <- ppk |>
  specify(response = support_before,
          success = "TRUE") |>
  calculate(stat = "prop")

obs_stat
```

```
Response: support_before (factor)
# A tibble: 1 × 1
   stat
  <dbl>
1 0.339
```

```r
# Null distribution: sample proportions simulated assuming p = 0.5
null <- ppk |>
  specify(response = support_before,
          success = "TRUE") |>
  hypothesize(null = "point", p = .5) |>
  generate(reps = 500, type = "draw") |>
  calculate(stat = "prop")

null
```

```
Response: support_before (factor)
Null Hypothesis: point
# A tibble: 500 × 2
   replicate  stat
   <fct>     <dbl>
 1 1         0.516
 2 2         0.5
 3 3         0.481
 4 4         0.504
 5 5         0.498
 6 6         0.493
 7 7         0.493
 8 8         0.492
 9 9         0.501
10 10        0.489
# ℹ 490 more rows
```

What would a Type I error be in this context?


What would a Type II error be in this context?
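One way to see why a test is "wrong by design" is to simulate many studies in a world where the null hypothesis p = 0.5 is actually true and count how often the test rejects it anyway. This is a minimal base-R sketch, not part of the original activity: it swaps the infer workflow for R's exact binomial test (`binom.test`), and the sample size `n = 100`, the seed, the number of simulated studies, and α = 0.05 are all hypothetical choices.

```r
# Sketch: estimate the Type I error rate of a two-sided test at alpha = 0.05
# ASSUMPTION: n = 100 is a hypothetical sample size, not the size of ppk.
set.seed(20)

n      <- 100   # hypothetical sample size
p_null <- 0.5   # null value, matching hypothesize(p = .5) above
alpha  <- 0.05  # significance level
reps   <- 2000  # number of simulated studies

# Number of supporters in each simulated study, drawn with the null true
successes <- rbinom(reps, size = n, prob = p_null)

# Two-sided exact binomial test p-value for each simulated study
p_values <- sapply(successes, function(x) {
  binom.test(x, n, p = p_null, alternative = "two.sided")$p.value
})

# H0 is true by construction, so every rejection is a Type I error
type1_rate <- mean(p_values <= alpha)
type1_rate
```

Expect `type1_rate` to land somewhat under 0.05: because the response is a count, the exact test cannot hit α exactly, and at n = 100 its actual size is roughly 0.035.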

Problem Set 15
